Server Management

Server management is the maintenance and operation of a server. While this can mean many things, the main idea behind server management is uptime. The whole purpose of a server is to have a reliable resource for users to interact with. Management of a server can vary depending on the size of the server and it’s purpose. Different types of servers require different types of management plans. The following sections discuss “standard” server management techniques. These techniques may need modification depending your servers’ role.

Web Server Management

Assuming you run a dedicated web server, there are several tasks that should be considered routine:

* BANDWIDTH

* Log check/rotation

* Critical patches

Depending on where this server is located, you will need to constantly monitor the bandwidth. If your server is co-located, you may want to consider bandwidth throttling. If your server is hit with a DoS and all the sudden starts eating Megabits/second, you should have a plan of action. There are many applications available that allow you trap SNMP information from multiple servers and networks to one centralized reporting server. Personally, I manage many different servers on many different networks. I use an application called cacti, to collect all types of information from each server/network.

Depending on where this server is located, you will need to constantly monitor the bandwidth. If your server is co-located, you may want to consider bandwidth throttling. If your server is hit with a DoS and all the sudden starts eating Megabits/second, you should have a plan of action. There are many applications available that allow you trap SNMP information from multiple servers and networks to one centralized reporting server. Personally, I manage many different servers on many different networks. I use an application called cacti, to collect all types of information from each server/network.

Checking your logs is also an important part of managing a web server. This can get hairy if you manage a server with multiple domains or a server with a large amount of traffic. However, if you check the traffic graphs and notice some unusual spikes, you many want to dig through the logs to find the root of the spikes. Depending on the servers purpose, you may want to manually rotate the log files, or archive them to another box on the network. Setting up a good logging policy is important, and also complicated. A log rotation strategy should be thoroughly planned prior to production and later checked for effectiveness. This type of planning would be different depending on the role of your server and also which platform you are using. Linux and Windows machine logs are fairly different and there is no “one plan works for all” strategy.

Critical Patches are also very important. While the Apache web server isn’t patch often, (especially if you are still using 1.3.x) there are other packages of a Linux system that need to be patched. If you are running IIS, there are updates to many aspects of the OS and web server that need to applied. As a system administrator, network engineer, etc. you should know the schedule of when Microsoft and other vendors release these patches. Personally, I manage many servers running Red Hat Enterprise Linux. These servers report to The Red Hat Network, which in turn sends emails to my blackberry alerting me of new patches for each machine. Most vendors release these patches at a certain time every month, and you should know when that is.

Management of a web server can be tricky business. Obviously, uptime is the ultimate goal with security as a close second. Just be careful when you do reboots, consider time zones, and the users that will be effected if and when the server is rebooted. You may want to go the “scheduled maintenance” route so users are not surprised when the server is down.

File Server Management

Assuming you run a dedicated file server, there are several tasks that should be considered routine:

* Login/logout times

* Backups

* Critical patches

File server management is somewhat different than that of a web server. File servers that housed on a corporate LAN generally have “off-peak” hours. This makes it much easier to install updates and make changes because the you have a larger window with nobody using the server. However, if your server is attached to a VPN, you may still have some users logged in late at night or on the weekends. It is always a good practice to post hours of maintenance or downtime. Depending on how many users access your file server and what exactly it is serving, you many want to test security patches on a ghost server before loading them onto production machines. This can be even more complicated if you have a cluster of file servers. Most servers in the enterprise are not immediately patched. Each patch is tested and may be modified before they are applied to production machines.

File server management is somewhat different than that of a web server. File servers that housed on a corporate LAN generally have “off-peak” hours. This makes it much easier to install updates and make changes because the you have a larger window with nobody using the server. However, if your server is attached to a VPN, you may still have some users logged in late at night or on the weekends. It is always a good practice to post hours of maintenance or downtime. Depending on how many users access your file server and what exactly it is serving, you many want to test security patches on a ghost server before loading them onto production machines. This can be even more complicated if you have a cluster of file servers. Most servers in the enterprise are not immediately patched. Each patch is tested and may be modified before they are applied to production machines.

Management of a file server is generally easier than web server. However, if you are the in-house I.T. person, you are probably going to catch some heat from everyone in the office if something breaks. The bottom line is that you should always take your time when deploying patches. Do a full backup of critical data and local machine settings. Of course, test before and after patching. You should be in the habit of checking the login/logout times of users. Generally a spot check will do. Personally, I just check for anything out of the ordinary. For instance, a VPN user logging in at 2 PM from unrecognized IP address should be a red flag. It is fairly easy to spot things out of the ordinary when you get used to the usage pattern of your end users.

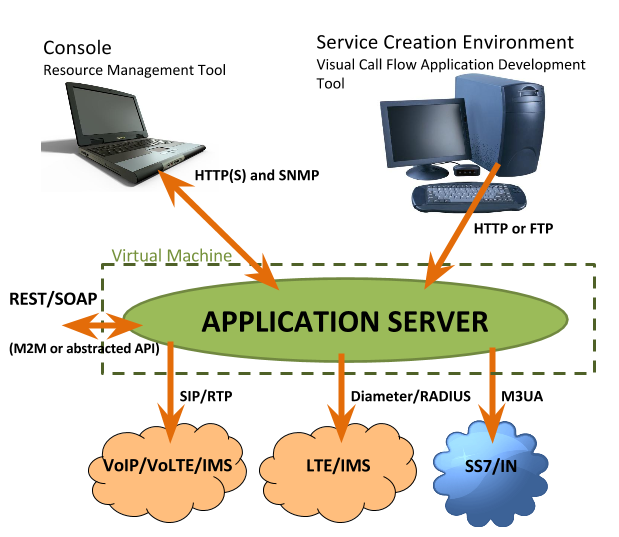

Application Server Management

Application servers can be very tricky to manage. There are many reasons for this:

* Heavy traffic/user load

* Customized programming

* Proprietary software

Some application servers are utilized by both internal staff and end users from a public network like the Internet. This can make scheduling downtime a hassle. Application servers are usually the core of a business. If their application server ceases to run, business can come to a stand-still. Personally, I manage an application server for a multi-million dollar financial institution. I take maintenance issues with those servers VERY seriously. This usually involves documenting times, details, and turning off my phone while I work. Making a simple mistake during the maintenance of an application server can be the difference between the machine being functional when the end users get back to the office.

Some application servers are utilized by both internal staff and end users from a public network like the Internet. This can make scheduling downtime a hassle. Application servers are usually the core of a business. If their application server ceases to run, business can come to a stand-still. Personally, I manage an application server for a multi-million dollar financial institution. I take maintenance issues with those servers VERY seriously. This usually involves documenting times, details, and turning off my phone while I work. Making a simple mistake during the maintenance of an application server can be the difference between the machine being functional when the end users get back to the office.

Depending on what type of application server you are running, you may have a custom tailored code base that was developed by an in-house or 3rd party programmer. Remember that certain security patches and specifically service packs may interfere with their programming. This is another time when it helps to have a ghost server or spare machine to test.

Another problem may arise if you are using some type of proprietary software. When OS security patches and specifically service packs are released, you should call the software vendor and ask if there are any known issues before deploying updates and/or service packs. Generally, you won’t run into any problems with critical security patches, but be aware of updates that change core DLLs in your OS. Personally, I have had some problems with this before. This is usually the fault of the software developer and not Microsoft. For a change 😉

Other Tips

Always take your time when working on servers. Do your prep work and make sure you have good backups before you start making changes. If you are working on something mission critical, it is always a good idea to have another admin sit with you as you work on it. Two eyes are always better than one, and I can tell you from personal experience that someone will remember something the other didn’t.

Personal Disasters

Personally, I have screwed up my fair share of things over the years. Everyone does it, you just hope that things won’t be too hard to fix 😉 I will share a few disasters with you so you will be aware of what can happen.

* Working Remotely

* Overloading an UPS

* DNS updates

* Microsoft Exchange Unmounts

Working remotely has it’s pros and cons. I love being able to manage servers and networks from my office. On the other hand, if something goes wrong, I end up waiting outside my client’s office in the morning. NOT FUN! I’ve screwed up a few things while working remotely. For instance, I generally do updates on servers and networks around 11 PM – 2AM. This way, nobody is using the server; at least they shouldn’t be! I had a situation a few years ago when I installed service pack 3 on a Windows 2000 Server. Little did I know that my client had put a floppy disk in the drive for whatever reason. Of course, when I rebooted the machine to complete the service pack installation, the machine was stuck because it was trying to boot from the floppy. I didn’t realize what had happened until I physically when down to the client site to see what happened to the server. Needless to say, now I always check to see if there are disks in drives before I reboot. I’ve also installed firewall software remotely, and ended up locking myself out because the firewall denied my remote access. I have also screwed things up by changing the IP addresses of a server. While that isn’t always a bad thing, it is if you forget to update the port forwarding in the router. Once the IP was changed, I lost my connection, and the RDP was still mapped through the router to the old IP. I was helpless at that point 🙁

An UPS- Uninterrupted Power Supply, is a device that allow a machine to continue running during a power outage. Every server should have one! Almost 1 year ago, I was working on an application server for a client. It was a slightly older machine, and the SCSI drives were configured in RAID0 for performance. There was a web server in the same rack but only one UPS. It was a 300VA UPS, so I figured it would work fine for power sags or a short outage. So I powered down the application server and plugged it into the UPS. So the UPS was now running two 275 watt power supplies. I powered the application server back on and everything was fine. The server started to POST and the SCSI drives were about to initialize. As the drives started to spin up (increasing the power output of the UPS) the UPS immediately locked up. Both machines instantly lost power. When I re-configured the power assignments again, the application server had apparently freaked out and one of the drives was corrupted. Since the server was using RAID0, there was no way for me to rebuild the data from the RAID host card. I was stuck there re-installing the OS, and pulling data from the backup drive. Thank goodness it was after hours and I had a good backup. The only problem I ran into was re-creating the user accounts, but nonetheless, it was not a fun project.

DNS is a great thing! While it’s operation is somewhat complicated, it is a very powerful technology and the Internet would not exist without it. However, one day I decided that I was going to move all the sites I was hosting (at that point around 25 or so) to a different server. Since that server was in a different data center, this meant changing the DNS for the domains. Keep in mind this was before I started using DNS fail-over. To make a long story short, I updated all the DNS settings, thinking I had enough time to move all the accounts, data, and email to the new server. While they claim it takes around 24 hours to update this information the root DNS servers, I have seen this process take as little as 4 hours. As you can imagine, the root DNS updates took effect quicker than I had anticipated. I had about 10 clients without email for 5 hours until I finished moving the accounts. Today, I have DNS fail-over in place, and most of my clients have accounts on 2 different servers. I also update my DNS entries last now…

Microsoft Exchange Server is a neat little thing. It works especially well for small to medium sized businesses that can really reap the benefits of it’s shared contacts and calendaring. However, if don’t keep an eye on exchange and let it get out of hand, you’ll soon have some trouble and frustration on your hands. I run a live exchange server at my house for development and testing purposes. Soon after service pack 2 was released, I rolled it out. One of the big selling points of SP2 was an increased storage size of something around 73 GB. So, I installed it. My exchange server has two RAID0 arrays. One array is 36 GB, and the other is 100 GB. However, I forgot that the exchange storage was actually on the first array. So by enabling the full 73 GB storage, the drive eventually became completely full and the exchange data-store had UN-mounted itself. This wasn’t really a problem because that machine pretty much downloads junk-only messages so I can test SPAM filters and other email tools. However, it is something to be aware of.

India’s Premier Server Management Company since 2007, Specialist in providing technical support services for Linux based Cpanel servers with complete server security services. As a technical support provider our expertise is outlined to handle cpanel server management to optimize critical production servers and processes with improved server optimization, guaranteed system availability, and reduce overall infrastructure costs.